Numerous novel explanation techniques have been developed for explainable AI (XAI). How can developers choose which technique to implement for different users and use cases? Which explanations are most suitable for specific user goals? We present the XAI Framework of Reasoned Explanations to help guide the choice of XAI features based on user goals and on human reasoning methods and biases.

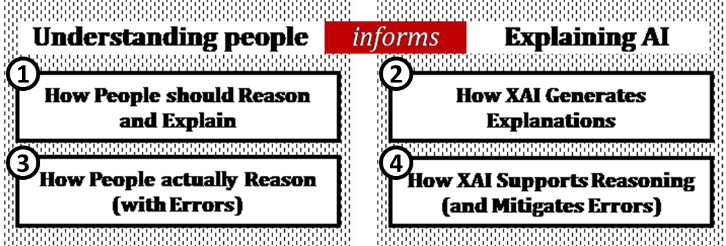

The framework describes how people reason rationally ① and heuristically, though subject to cognitive biases ③; how XAI facilities support specific rational reasoning processes ②; and how XAI facilities can be designed to target decision errors ④. The framework identifies pathways between human reasoning and XAI facilities that help organize explanations and reveal gaps, where new explanations can be developed to address an unmet reasoning need.
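To make the idea of "identifying gaps" concrete, here is a minimal sketch, assuming the pathways are stored as a mapping from each XAI facility to the reasoning needs or biases it targets. The mapping below is illustrative only: it borrows two pathways named later on this page (prior probability and input attributions mitigating confirmation bias) and adds a placeholder target, `"hypothetical unmet need"`, that is not part of the framework. The function name `find_gaps` is likewise hypothetical.

```python
from typing import Dict, List, Set

# Illustrative mapping only (not the framework's full set of pathways):
# each XAI facility -> the reasoning needs or biases it is linked to.
SUPPORTS: Dict[str, Set[str]] = {
    "prior probability": {"confirmation bias"},
    "input attributions": {"confirmation bias"},
}

# Targets to check; the second entry is a hypothetical placeholder.
TARGETS: List[str] = [
    "confirmation bias",
    "hypothetical unmet need",
]


def find_gaps(targets: List[str], supports: Dict[str, Set[str]]) -> List[str]:
    """Return the targets that no XAI facility is linked to."""
    # Union of every target covered by at least one facility.
    covered: Set[str] = set().union(*supports.values())
    return [target for target in targets if target not in covered]


print(find_gaps(TARGETS, SUPPORTS))  # ['hypothetical unmet need']
```

Any target returned by `find_gaps` marks a place where no existing explanation serves the reasoning need, which is where a new explanation type would be worth designing.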

Example XAI techniques include Intelligibility Question Types [Lim 2009, Lim 2010], Interpretable Decision Sets [Lakkaraju], MMD-Critic [Kim], SHAP [Lundberg 2017], and TCAV [Kim 2018], among others.

The conceptual XAI Framework of Reasoned Explanations describes how human reasoning processes (left) inform XAI techniques (right).

Each bullet point describes a different construct: theories of reasoning, XAI techniques, or strategies for designing XAI. Elements are the specific items listed under each construct.

Arrows indicate pathway connections: red arrows show how theories of human reasoning inform XAI features, and grey arrows show inter-relations between reasoning processes and associations among XAI features.

For example, hypothetico-deductive reasoning can be interfered with by System 1 thinking, causing confirmation bias (grey arrow). Confirmation bias can be mitigated (follow the red arrow) by presenting information about the prior probability or input attributions. Next, we can see that input attributions can be implemented as lists and visualized using tornado plots (follow the grey arrow).
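The walkthrough above amounts to following typed edges through a directed graph. The sketch below encodes only the pathways named in that example, with each edge tagged `"red"` or `"grey"` as in the figure, and enumerates every path between two elements with a depth-first search. The edge list, the collapsing of "interfered with by System 1 thinking" into a single grey edge, and the helper names `build_graph`, `find_paths`, and `describe` are all assumptions made for illustration, not part of the framework's published tooling.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical encoding of the example pathways only.
# "red": a reasoning theory informs an XAI feature (here, a bias it mitigates).
# "grey": inter-relations between reasoning processes or among XAI features.
EDGES: List[Tuple[str, str, str]] = [
    ("hypothetico-deductive reasoning", "confirmation bias", "grey"),  # via System 1 interference
    ("confirmation bias", "prior probability", "red"),
    ("confirmation bias", "input attributions", "red"),
    ("input attributions", "lists", "grey"),
    ("input attributions", "tornado plots", "grey"),
]

Step = Tuple[str, Optional[str]]  # (node, color of the edge used to reach it)


def build_graph(edges: List[Tuple[str, str, str]]) -> Dict[str, List[Tuple[str, str]]]:
    """Adjacency list: node -> [(neighbor, edge color), ...]."""
    graph: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for src, dst, color in edges:
        graph[src].append((dst, color))
    return graph


def find_paths(graph: Dict[str, List[Tuple[str, str]]], start: str, goal: str) -> List[List[Step]]:
    """Depth-first enumeration of all simple (cycle-free) paths from start to goal."""
    results: List[List[Step]] = []

    def dfs(node: str, steps: List[Step], visited: Set[str]) -> None:
        if node == goal:
            results.append(steps)
            return
        for nxt, color in graph.get(node, []):
            if nxt not in visited:
                dfs(nxt, steps + [(nxt, color)], visited | {nxt})

    dfs(start, [(start, None)], {start})
    return results


def describe(path: List[Step]) -> str:
    """Render a path as 'A -[color]-> B -[color]-> C'."""
    text = path[0][0]
    for node, color in path[1:]:
        text += f" -[{color}]-> {node}"
    return text


graph = build_graph(EDGES)
for path in find_paths(graph, "hypothetico-deductive reasoning", "tornado plots"):
    print(describe(path))
# hypothetico-deductive reasoning -[grey]-> confirmation bias -[red]-> input attributions -[grey]-> tornado plots
```

The branch through "prior probability" is explored but dropped because it never reaches "tornado plots"; querying a different goal, such as `"lists"`, would surface the sibling visualization instead.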