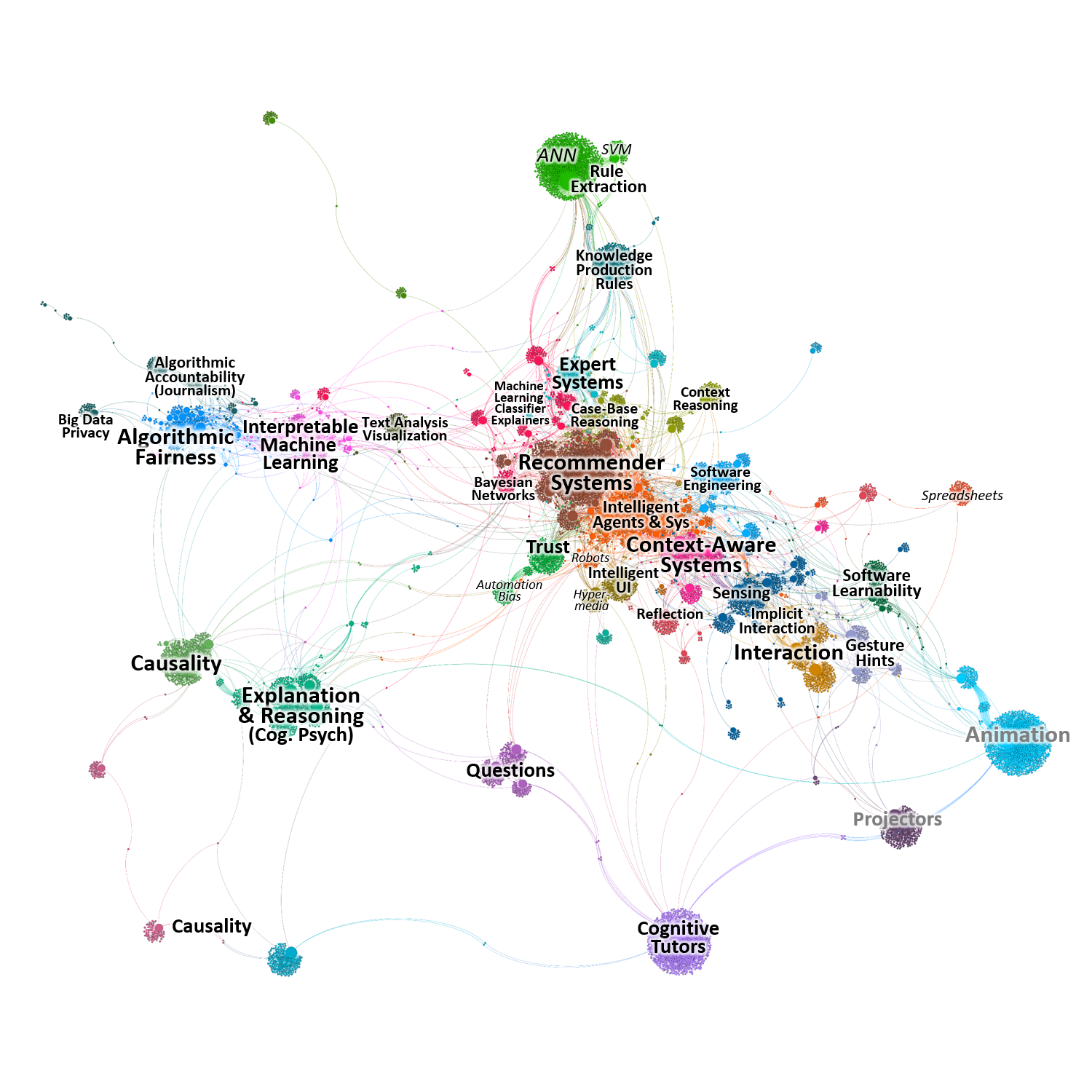

Advances in artificial intelligence, sensors, and big data management have far-reaching societal impacts. As these systems augment our everyday lives, it becomes increasingly important for people to understand them and remain in control. We investigate how HCI researchers can help to develop accountable systems by performing a literature analysis of 289 core papers on explanations and explainable systems, as well as 12,412 citing papers. Using topic modeling, co-occurrence analysis, and network analysis, we map the research space across diverse domains, such as algorithmic accountability, interpretable machine learning, context-awareness, cognitive psychology, and software learnability. We reveal fading and burgeoning trends in explainable systems, and identify domains that are closely connected or mostly isolated. The time is ripe for the HCI community to ensure that powerful new autonomous systems have intelligible interfaces built in. From our results, we propose several implications and directions for future research towards this goal.

Paper:

- Abdul, A., Vermeulen, J., Wang, D., Lim, B. Y. (corresponding author), and Kankanhalli, M. 2018. Trends and Trajectories for Explainable, Accountable and Intelligible Systems: An HCI Research Agenda. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI ’18).