Feature attribution is widely used to explain how much each measured input feature value influences a model's output inference. However, measurements can be uncertain, and it is unclear how awareness of input uncertainty affects users' trust in explanations.

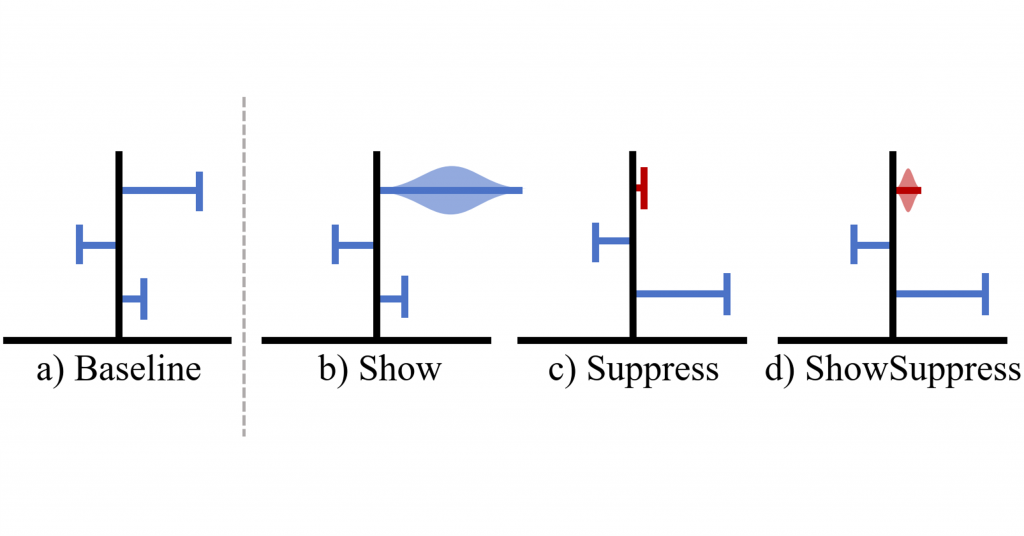

We propose and study two approaches to help users manage their perception of uncertainty in a model explanation: 1) transparently show the uncertainty in feature attributions so that users can reflect on it, and 2) suppress attribution to features with uncertain measurements and shift attribution to other features by regularizing with an uncertainty penalty.
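
To make the two approaches concrete, here is a minimal sketch on a toy linear model. It is not the paper's implementation. It assumes that attribution is weight times feature value, that measurement noise is Gaussian with a known per-feature standard deviation `sigma`, and that the uncertainty penalty takes the form `lam * sum((w_i * sigma_i)^2)`. The function names, the toy data, and the value of `lam` are all hypothetical.

```python
import numpy as np

def fit_linear(X, y, sigma, lam):
    """Least squares plus an assumed uncertainty penalty lam * sum_i (w_i * sigma_i)^2."""
    # Closed-form minimizer: (X^T X + lam * diag(sigma^2)) w = X^T y
    A = X.T @ X + lam * np.diag(sigma ** 2)
    return np.linalg.solve(A, X.T @ y)

def attribution(w, x):
    """Attribution of each feature for a linear model: weight times value."""
    return w * x

def attribution_uncertainty(w, x, sigma, n_samples=2000, seed=0):
    """Approach 1 (show): std. dev. of each attribution under Gaussian input noise."""
    rng = np.random.default_rng(seed)
    noisy_x = x + rng.normal(0.0, sigma, size=(n_samples, x.size))
    return attribution(w, noisy_x).std(axis=0)

# Hypothetical toy data: two correlated features that both drive the target.
rng = np.random.default_rng(42)
z = rng.normal(size=500)
X = np.column_stack([z + 0.1 * rng.normal(size=500),
                     z + 0.1 * rng.normal(size=500)])
y = X.sum(axis=1)

# Assumed measurement uncertainty: feature 0 is precise, feature 1 is noisy.
sigma = np.array([0.05, 1.0])

# Approach 2 (suppress): compare an unpenalized fit with a penalized one.
w_plain = fit_linear(X, y, sigma, lam=0.0)
w_pen = fit_linear(X, y, sigma, lam=50.0)

x = X[0]  # one instance to explain
unc_plain = attribution_uncertainty(w_plain, x, sigma)
unc_pen = attribution_uncertainty(w_pen, x, sigma)

print("weights (plain, penalized):", w_plain, w_pen)
print("attribution std (plain, penalized):", unc_plain, unc_pen)
```

On this toy data, the penalized fit shrinks the weight on the noisy feature and shifts it to the correlated, precisely measured one, so the spread of the noisy feature's attribution drops. The hypothetical `lam` controls how strongly attribution is suppressed.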

Through simulation experiments, qualitative interviews, and quantitative user evaluations, we identified benefits of moderately suppressing attribution uncertainty and concerns about showing it.

Congratulations to team members Danding Wang and Wencan Zhang!

Artificial Intelligence Journal Video Preview:

IJCAI 2021 Presentation:

Danding Wang, Wencan Zhang, Brian Y. Lim. 2021. Show or Suppress? Managing Input Uncertainty in Machine Learning Model Explanations. Artificial Intelligence.