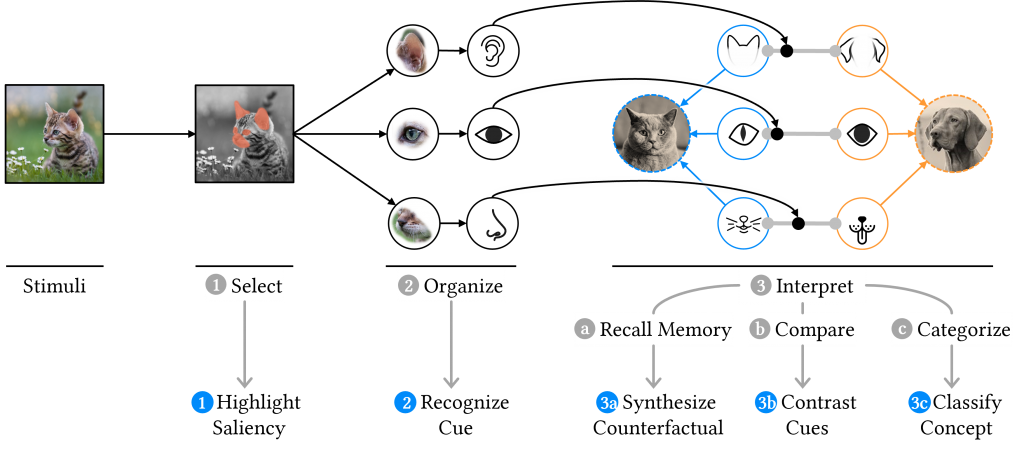

Explainable AI (XAI) is important for building trustworthy and understandable systems. To aid understanding, explanations need to be relatable, but current techniques remain overly technical and rely on obscure information. Drawing on the perceptual processing theory of human cognition, we define the XAI Perceptual Processing framework to provide unified, relatable explanations of AI. We aim to create interpretable AI models that are human-like.

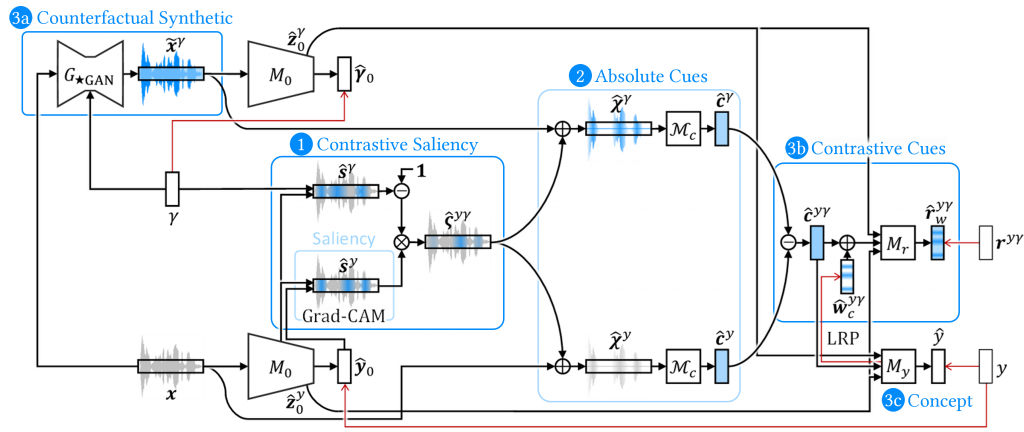

We implemented a deep learning model, RexNet, that explains its predictions with relatable examples, attentions, and cues. Technically, these are provided as Counterfactual Synthetic examples, Contrastive Saliency, and Contrastive Cues explanations, respectively.

For example, our system can explain why an AI predicted that a person’s voice was happy by generating a sad version of the speech, identifying where to focus, and describing how the cues differed, such as higher volume and higher pitch. Our evaluations show that RexNet improves model performance, explanation fidelity, perceived usefulness, and human-AI decision-making.
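To make the idea of a contrastive cue description concrete, here is a minimal Python sketch. It is not RexNet's implementation (RexNet is a deep learning model that learns its explanations); it only illustrates how cue values for the actual speech and for a counterfactual version could be turned into "higher" or "lower" statements. The function name `describe_contrast`, the `tolerance` threshold, and the numeric cue values are all assumptions made up for illustration.

```python
from typing import Dict


def describe_contrast(
    actual: Dict[str, float],
    counterfactual: Dict[str, float],
    tolerance: float = 0.05,
) -> Dict[str, str]:
    """Label each cue of `actual` relative to `counterfactual`.

    A cue is "higher" or "lower" when its relative difference exceeds
    `tolerance` (a hypothetical threshold), and "similar" otherwise.
    """
    labels = {}
    for name, actual_value in actual.items():
        reference = counterfactual[name]
        # Relative difference, guarded against division by zero.
        relative = (actual_value - reference) / max(abs(reference), 1e-9)
        if relative > tolerance:
            labels[name] = "higher"
        elif relative < -tolerance:
            labels[name] = "lower"
        else:
            labels[name] = "similar"
    return labels


# Illustrative, made-up cue values (not measurements from the paper).
happy_speech = {"volume": 68.0, "pitch": 220.0}
sad_counterfactual = {"volume": 60.0, "pitch": 180.0}

print(describe_contrast(happy_speech, sad_counterfactual))
```

With these made-up values, both cues are labeled "higher", mirroring the "higher volume and higher pitch" description in the example above.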

RexNet thus provides human-relatable explanations that can improve understanding of, and trust in, AI across many applications.

Our paper received the Best Paper Award for the Top 1% of papers at CHI’22. Congratulations Wencan!

Wencan Zhang and Brian Y. Lim. 2022. Towards Relatable Explainable AI with the Perceptual Process. In Proceedings of the International Conference on Human Factors in Computing Systems. CHI ’22. Best Paper Award (Top 1%).

Image credit: “dog face” and “cat face” by irfan al haq, “Dog” by Maxim Kulikov, “cat mouth” by needumee from the Noun Project.