The widespread use of artificial intelligence has spurred much interest in ensuring that we can understand and trust these intelligent systems. Explainable AI (XAI) is a burgeoning field whose research and development have advanced rapidly. Yet research on explaining intelligent systems has been progressing for decades across many domains, spanning computer science, cognitive psychology, and human-computer interaction.

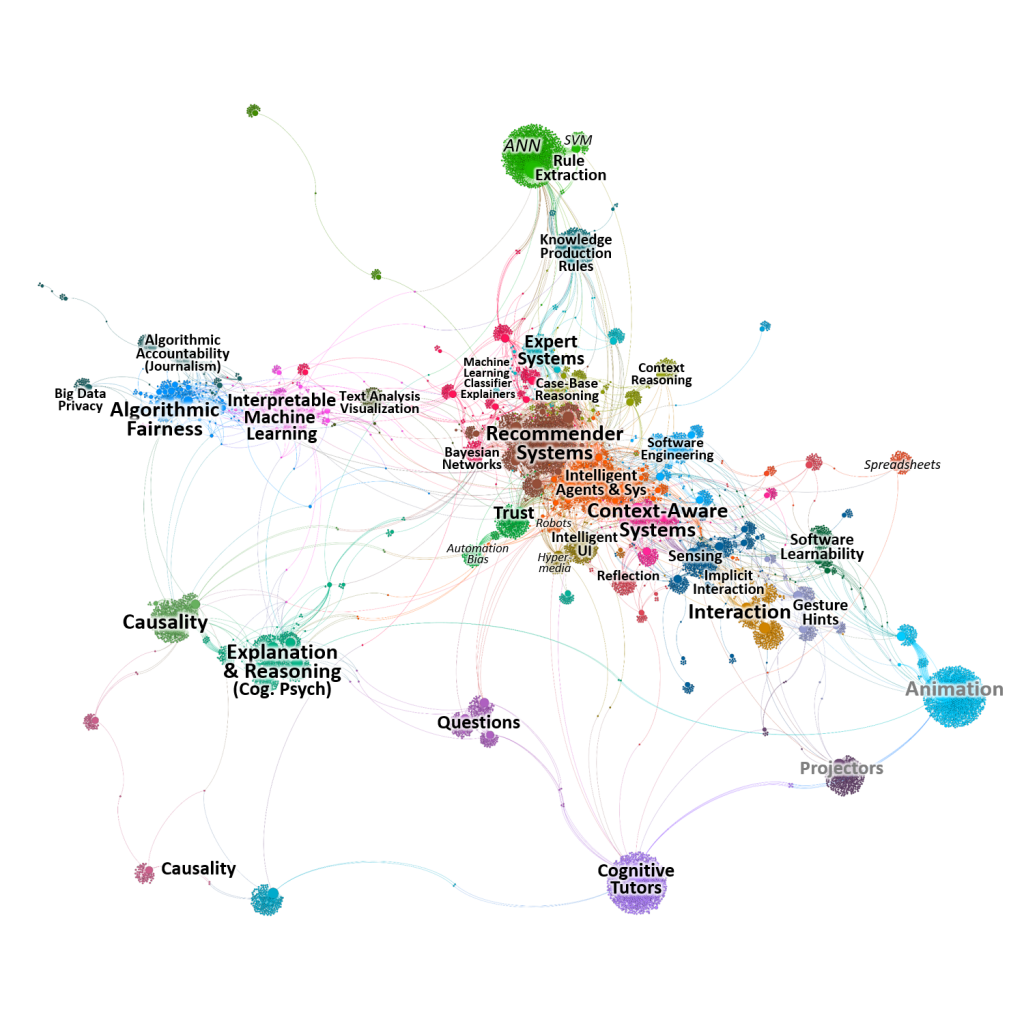

We analyzed over 12,000 papers to identify research trends and trajectories. As AI becomes more commonplace, there is a strong need for human interpretability, and we argue that closer collaboration between the AI and HCI communities is needed.

Abdul, A., Vermeulen, J., Wang, D., Lim, B. Y. (corresponding author), Kankanhalli, M. 2018. Trends and Trajectories for Explainable, Accountable and Intelligible Systems: An HCI Research Agenda. In Proceedings of the International Conference on Human Factors in Computing Systems (CHI ’18).