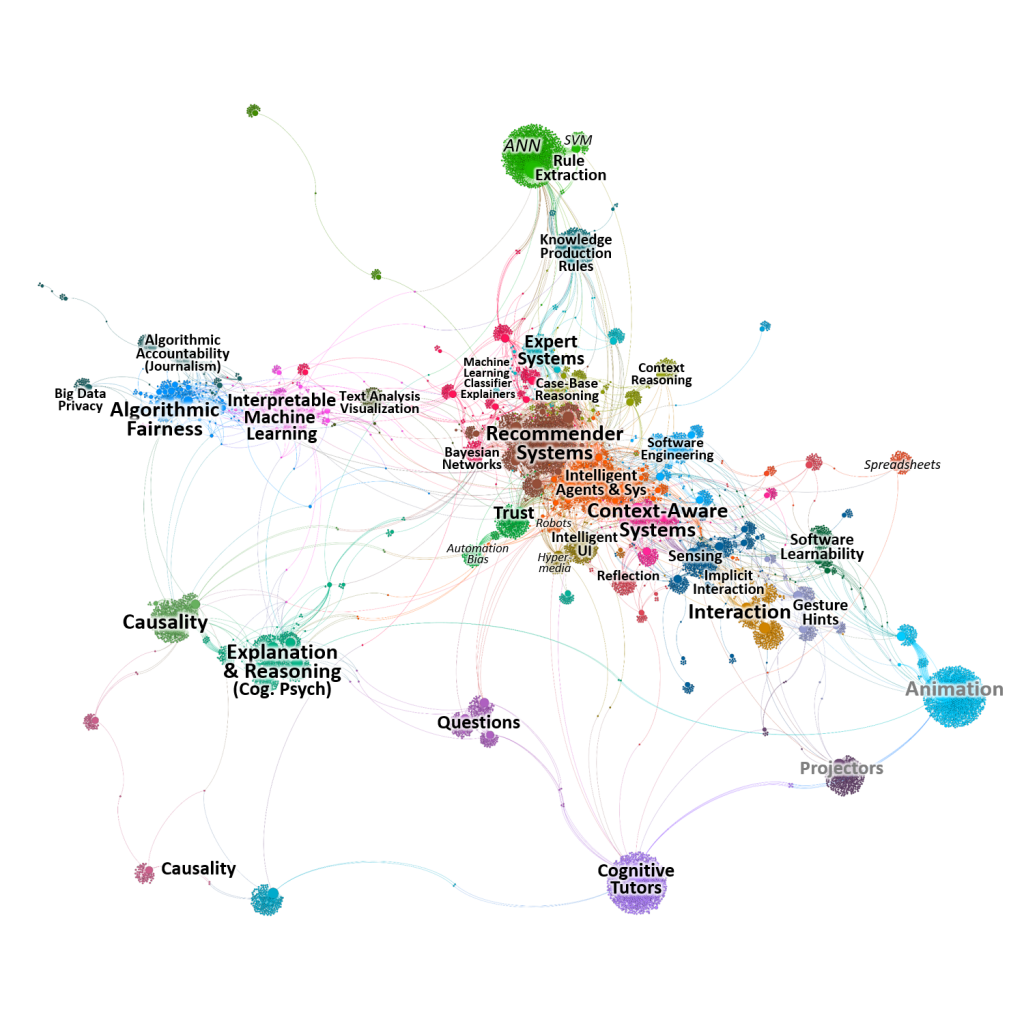

The widespread use of artificial intelligence has spurred much interest in ensuring that we can understand and trust these intelligent systems. Explainable AI (XAI) is a burgeoning field with a rapid pace of research and development, but research on explaining intelligent systems has been progressing for decades across many domains, spanning computer science, cognitive psychology, and human-computer interaction (HCI).

We analyzed over 12,000 papers to identify research trends and trajectories. As AI becomes more commonplace, there is a strong need for human interpretability, and we argue that closer collaboration between the AI and HCI communities is needed.

Abdul, A., Vermeulen, J., Wang, D., Lim, B. Y. (corresponding author), and Kankanhalli, M. 2018. Trends and Trajectories for Explainable, Accountable and Intelligible Systems: An HCI Research Agenda. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI ’18).
