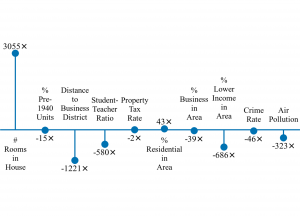

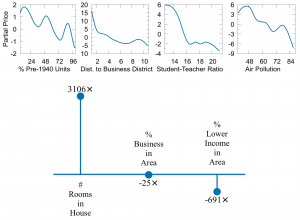

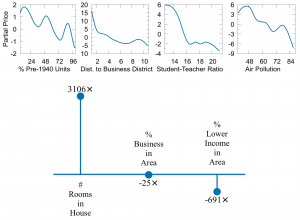

Explanations of artificial intelligence can be simplified by limiting the number of variables, but the complexity of how they are visualized can still impede quick interpretation. We quantitatively modeled the cognitive load of machine learning model explanations as visual chunks and introduced Cognitive-GAM (COGAM) to balance explanation accuracy against cognitive load.

Congratulations to team member Ashraf Abdul and collaborators Christopher von der Weth and Mohan Kankanhalli!

| Sparse Linear Models (sLM) | Cognitive-GAM (COGAM) | Generalized Additive Models (GAM) |
| --- | --- | --- |
| Lowest Cognitive Load, but Lowest Accuracy | Balances Cognitive Load and Accuracy by increasing accuracy with marginal increase in cognitive load | Highest Cognitive Load, but Highest Accuracy |
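To make the trade-off concrete, here is a minimal toy sketch of choosing a model by penalizing cognitive load. It is not the formulation from the paper: the `Candidate` class, the `penalized_cost` and `pick` helpers, the weight `lam`, and all error and chunk values are hypothetical, chosen only to illustrate how a single weight can move the choice between the three model types.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    error: float  # hypothetical prediction error (lower is better)
    chunks: int   # hypothetical number of visual chunks a reader must process


def penalized_cost(candidate: Candidate, lam: float) -> float:
    """Error plus a penalty proportional to the number of visual chunks."""
    return candidate.error + lam * candidate.chunks


def pick(candidates: list[Candidate], lam: float) -> Candidate:
    """Return the candidate with the lowest penalized cost for weight lam."""
    return min(candidates, key=lambda c: penalized_cost(c, lam))


# Made-up numbers for illustration only; they are not results from the paper.
CANDIDATES = [
    Candidate("sLM", error=0.30, chunks=4),
    Candidate("COGAM-like", error=0.22, chunks=7),
    Candidate("GAM", error=0.20, chunks=20),
]

if __name__ == "__main__":
    for lam in (0.0, 0.01, 0.1):
        print(f"lam={lam}: {pick(CANDIDATES, lam).name}")
```

With these made-up numbers, no penalty (`lam=0.0`) selects the full GAM, a small penalty (`lam=0.01`) selects the middle candidate, and a large penalty (`lam=0.1`) selects the sparse linear model.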

NUS Computing Feature: https://www.comp.nus.edu.sg/news/features/3369-2020-brian-lim/

CHI 2020 Video Preview:

Recorded presentation at 2nd NUS Research Week:

Abdul, A., von der Weth, C., Kankanhalli, M., and Lim, B. Y. 2020. COGAM: Measuring and Moderating Cognitive Load in Machine Learning Model Explanations. In Proceedings of the International Conference on Human Factors in Computing Systems. CHI ’20.